Month: November 2020

-

Open source AI chips making Green Waves: Bringing energy efficiency to IoT architecture

What if machine learning applications on the edge were possible, pushing the limits of size and energy efficiency? GreenWaves is doing just that, based on an open-source parallel ultra-low-power microprocessor architecture. Though it’s early days, the implications for IoT architecture and energy efficiency could be dramatic. The benefits open source offers in terms of innovation…

-

From big data to AI: Where are we now, and what is the road forward?

It took AI just a couple of years to go from undercurrent to mainstream. But despite rapid progress on many fronts, AI is still something few understand and fewer yet can master. Here are some pointers on how to make it work for you, regardless of where you are in your AI journey. In 2016,…

-

Human in the loop: Machine learning and AI for the people

Human in the loop (HITL) is a mix-and-match approach that may help make ML both more efficient and more approachable. Paco Nathan is a unicorn. It’s a cliché, but it gets the point across for someone who is equally versed in discussing AI with White House officials and Microsoft product managers, working on big data pipelines and organizing and…

-

Water data is the new oil: Using data to preserve water

Data and water do mix, apparently. Using data and analytics can lead to great benefits in water preservation. We’ve all heard that data is the new oil a thousand times by now. Arguably, though, we can all live without data, or even oil, but there’s one thing we can’t do without: water. Preserving water and catering…

-

Artificial intelligence in the real world: What can it actually do?

How the cloud enables the AI revolution. AI is mainstream these days. The attention it gets and the feelings it provokes cover the whole gamut: from hands-on technical to business, from social science to pop culture, and from pragmatism to awe and bewilderment. Data and analytics are a prerequisite and an enabler for AI, and…

-

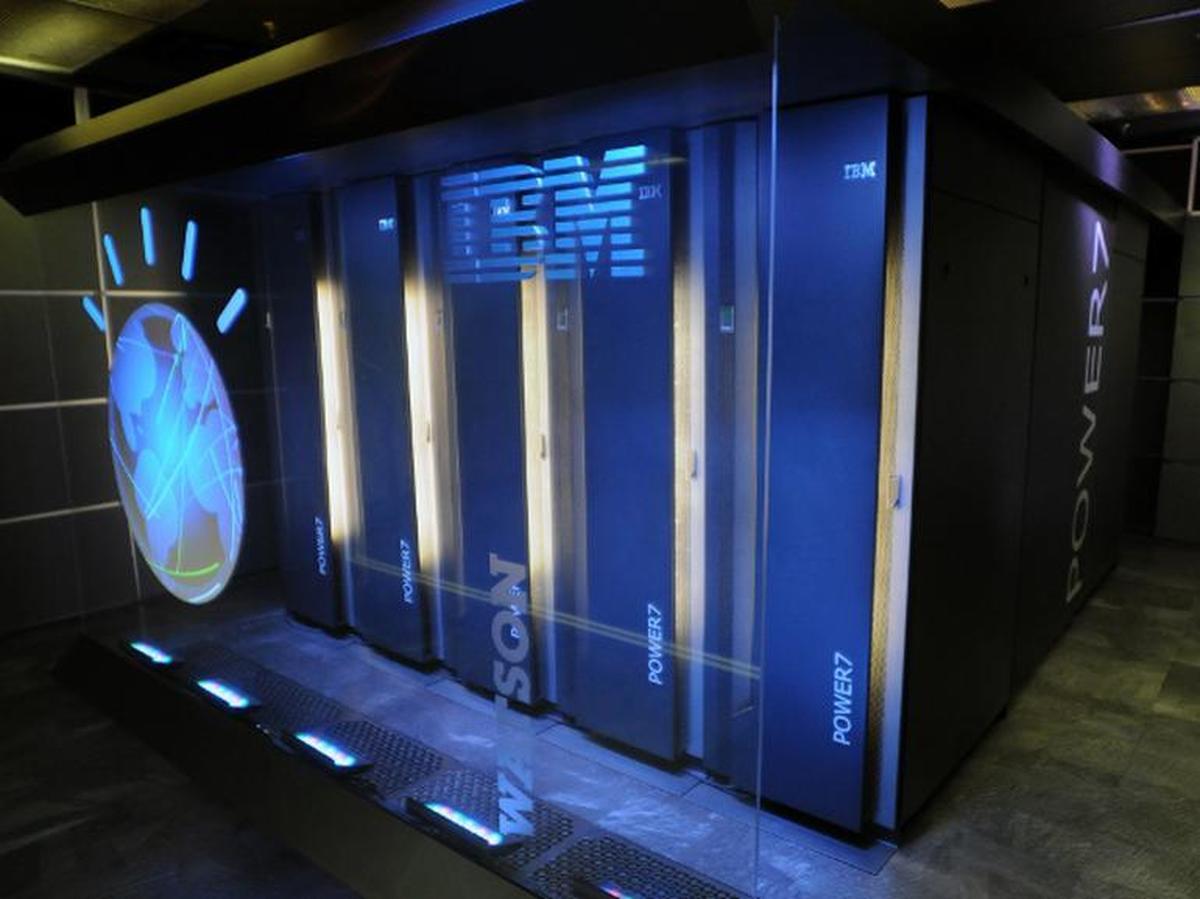

IBM’s Watson does healthcare: Data as the foundation for cognitive systems for population health

Watson is IBM’s big bet on AI, and healthcare is a prime domain for present and future applications. We take an inside look at Watson, why and how it can benefit healthcare, and what kind of data is used by whom in this process. IBM’s big bet on Watson is all over the news. This…

-

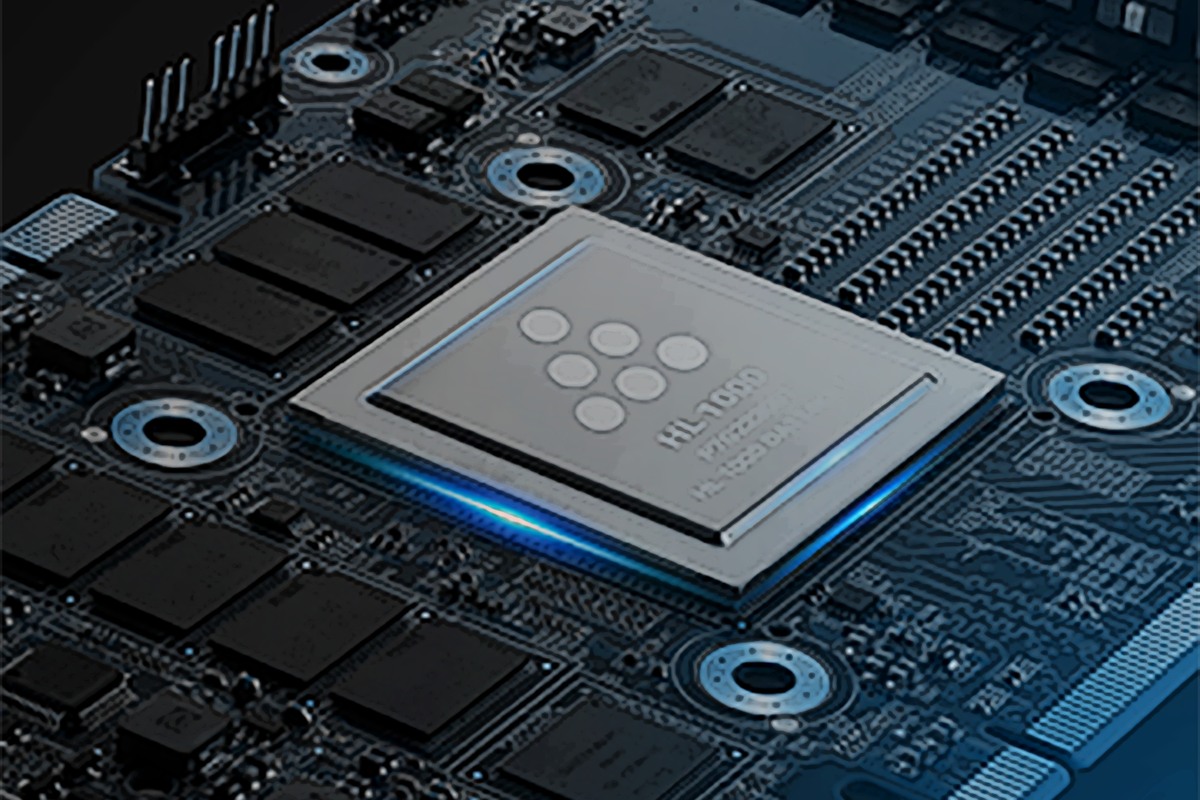

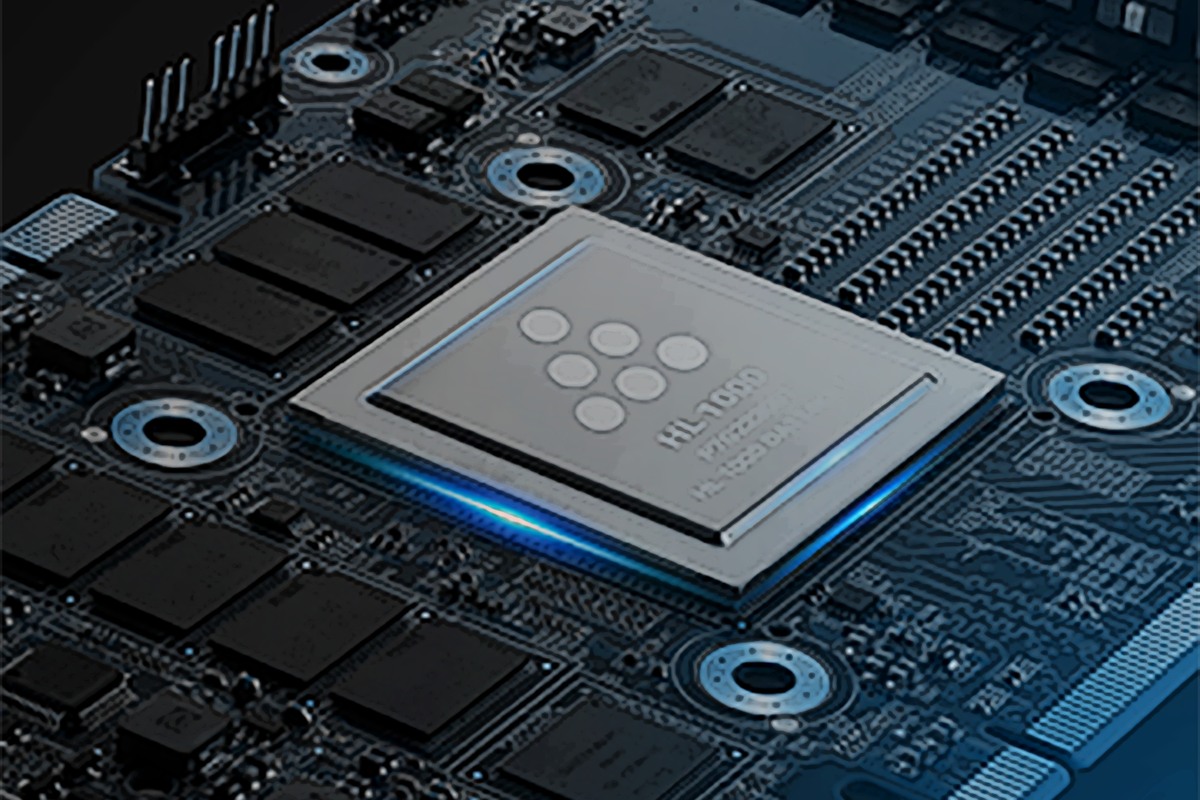

Habana, the AI chip innovator, promises top performance and efficiency

Habana is the best-kept secret in AI chips. Designed from the ground up for machine learning workloads, its chips promise superior performance combined with power efficiency to revolutionize everything from data centers in the cloud to autonomous cars. As data generation and accumulation accelerate, we’ve reached a tipping point where using machine learning just works.…
